Why it's better to use PostgreSQL

SQLite root could start experiencing issues once it reaches approximately 1,000,000 revisions and 840,000 files, with a database size of around 650MB and a total data size of about 4.4TB. We consider these parameters borderline limits for SQLite roots. Beyond these thresholds, the SQLite engine struggles to function properly.

PostgreSQL handles large databases much more efficiently, with almost no limit to the root size, and using it requires less processing power. You could see a noticeable performance improvement after converting your largest roots. We recommend switching to PostgreSQL for all new roots by default and converting all existing roots to PostgreSQL. Please note that there is no need to migrate/convert the deleted/removed roots.

Starting with Private Cloud 3.6.20, new Private Cloud installations come with PostgreSQL 12 pre-installed and PostgreSQL set as default root database type. To upgrade an existing installation, follow these steps: Upgrading PostgreSQL on Private Cloud from 9.x to 12.x.

Create a new PostgreSQL roots database store

To use PostgreSQL for your roots, you need to create a compatible database store.

A new Postgres roots database is created using the db_mgr utility included with Private Cloudserver (default location \Anchor Server\bin). This utility is used for creating all Anchor database types & the Postgres roots database is no exception. The type of the database is called roots. Check db_mgr command-line options for more detailed information.

There are several command-line parameters that are specific to this operation, namely those starting with --rdb-. You most certainly need to specify the new database name, username, and password. You very likely also want to specify a public hostname for the database server.

--rdb-user

--rdb-password

--rdb-host

--rdb-port

--rdb-dbname

Example invocation:

db_mgr -t roots -a create --config <path-to-server-config-ini> --rdb-user=postgres --rdb-password=XXXXXXXX --rdb-host=db_server1 --rdb-port=5432 --rdb-dbname=roots_db0

|

This will do the following:

-

Create a new database (named

roots_db0) onPostgresserver running on hostdb_server1, port5432with provided credentials (postgres,XXXXXXXX). -

Register this newly created root store into the target Anchor environment's

portaldatabase

Any already running server processes will not automatically use this store until they are restarted or explicitly told to reload their store configuration using the HTTP API request:

| http://<IP>:<PORT>/system/load_stores/ |

Take parameters from C:\Anchor Server\conf\config.ini, <IP> from parameter http_host,<PORT> from parameter http_port. Issue the request using the browser directly on the app server itself and make sure the trailing slash is present at the end of the URL. Without slash it will fail with "Endpoint not found". This request should be issued on all app servers if there are multiple ones.

If for some reason you only want to create a schema, without registering the corresponding rootstore in the portal database, you need to also pass --rdb-no-register command-line option in db_mgr invocation. If needed, such a database can then be registered manually in the portal database later on by adding an appropriate record to the root_db_location database table (allowed status values are available, disabled & read only, while the rest the columns should be self-explanatory). Note though that it should be empty at the time or any roots with metadata stored in it should also be registered in the same portal database (and details on that are much too involved to get into here).

Make PostgreSQL the default database type

Run this to enable PostgreSQL by default for all new roots created in the specified organization:

"C:\Anchor Server\penv\Scripts\python.exe" "C:\Anchor Server\web\manage.py" features enable pgroots <org_id>

|

If you use 1 for<org_id>, this will enable Postgres by default for all organizations in your environment.

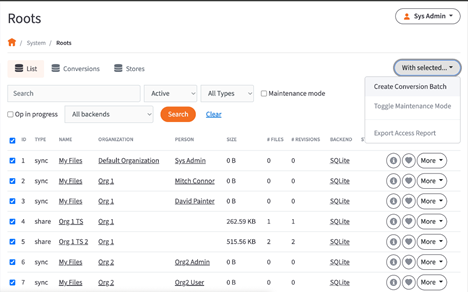

Review roots and their database types

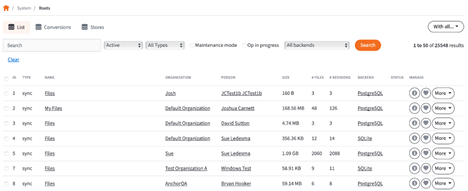

As system admin, go to https://hostname/system/roots. You will see a list of all your roots and their database types, and whether each root is SQLite or PostgreSQL(column "Backend").

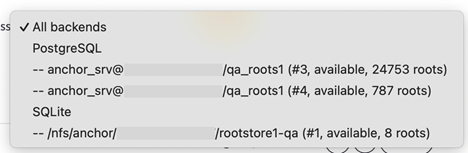

You can also filter out by root storages and see how many roots you have in a given database type (backend).

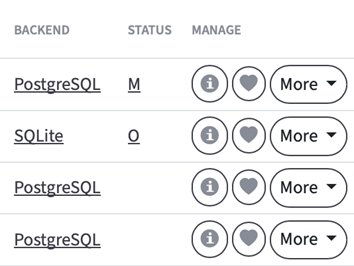

In the "Status" column, you can see if the root is in maintenance mode (letter "M") or if it's undergoing a conversion or another operation (letter "O"). Empty cell means the root is working normally.

Reconfigure your Postgres server

When the Anchor Server is running in SQLite mode, the number of connections to the Postgres server is minimal. But when it works with Postgres roots, it creates new SQL connections for each client that, in parallel with others, is trying to sync up or down. So if 50 client machines are currently online and sending requests at the same time, you need +50 connections to handle that at a peak times.

This number of allowed connections is limited by the parameter max_connections in the main Postgres server configuration file, postgresql.conf. This file is typically located at %postgresql_dir%\data. Here %postgresql_dir% (for example, C:\Program Files\PostgreSQL\11) is the folder that PostgreSQL was installed in.

The default value is typically 100 connections. Just increasing max_connections alone is not enough. You also need to increase shared_buffers parameter to attribute for an increased number of connections. This parameter determines how much memory is dedicated to PostgreSQL to use for caching data. On Windows, the useful range for this parameter is from 64MB to 512MB.

For example, your configuration before the changes could look like this:

max_connections = 100

shared_buffers = 24MB

If you want to increase the number of connections to 300, you have to modify the shared buffers accordingly:

max_connections = 300

shared_buffers = 80MB

Before increasing your connection count you might need to determine if you need to scale up your Postgres server deployment as a whole. If you are running the minimalistic configuration which has 2-4Gbs of RAM and has everything on one single server (Postgres and Anchor Server all on the same instance) you will absolutely have to upgrade it to 8-16Gb of RAM, or even better to move the Postgres server to the separate individual machine or VM.

As each connection consumes RAM, you should be looking to minimize their use. Increasing the number of connections doesn't scale well as you can't endlessly increase the limits of connections for Postgres. So if you have a deployment that does need a large number of connections from multiple Anchor Server nodes and/or serving more than a couple of hundred of active subscribed machines, then consider using a tool such as pg_bouncer which can pool connections for you. We're using it on SaaS with very good results. Such a solution would be a better option than increasing the number of allowed connections all the time.

Convert roots

You might want to run root conversions outside of working hours. All types of conversions temporarily place the root in maintenance mode, making it inaccessible to users, and disable the maintenance mode once the conversion is complete. For most roots, the conversion shouldn't take more than 5 minutes.

Convert a single root using Web UI

You can convert individual roots using the Web interface.

Method1. As system admin, go to https://hostname/roots/conversion/1/, where 1 is the top-level organization id and can be changed to list only roots belonging to some specific organization.![]()

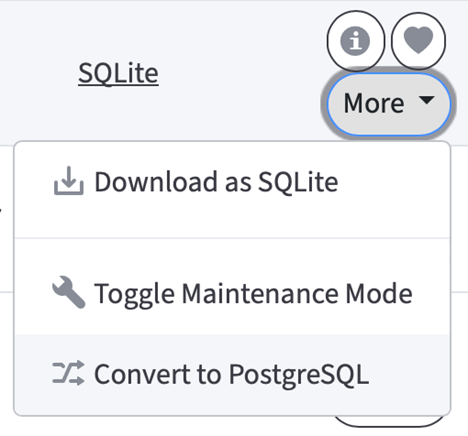

Method 2. Alternatively, you can do a conversion from the list of roots https://hostname/system/roots. Search for the root you need, confirm it's using SQLite and click More -> Convert to PostgreSQL.

Note: We currently don't recommend this method. While it works, it could throw an error "Failed to start root conversion", misleading the administrator. If you face this error, don't repeat the conversion attempt but rather check the "Status" and "Backend" columns for this root and wait a few minutes, in most cases the conversion will finish successfully.

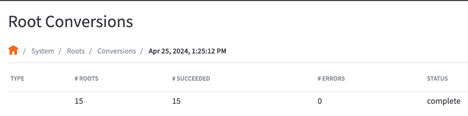

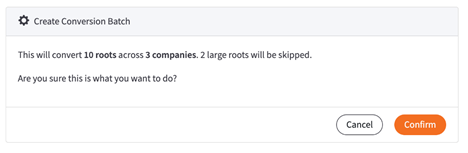

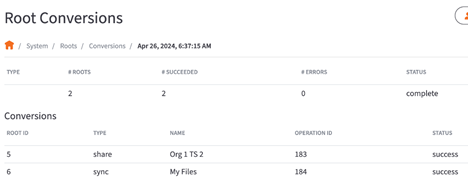

Batch-convert roots

Batch conversion is available to system admins at https://hostname/system/roots/conversions.

There are three different methods to batch-convert roots: Randomly, Company IDs orRoot IDs.

We recommend not converting all roots at once. Instead, convert no more than several hundreds of roots in one go. It's safer from the perspective of the server resource management.

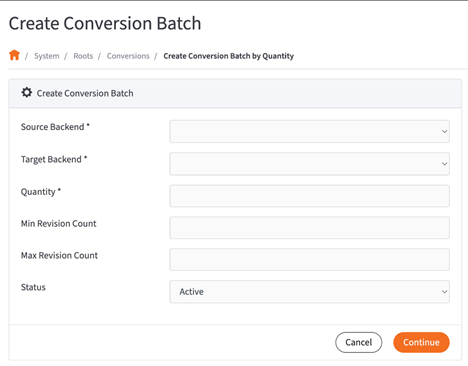

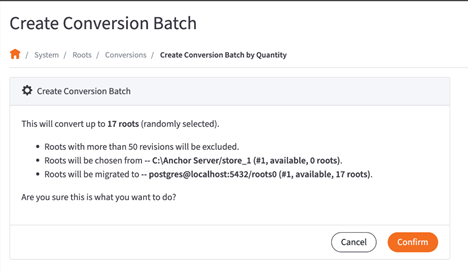

Create Conversion Batch by Quantity (Randomly)

https://hostname/system/roots/conversions/create/qty

This method allows the admin to select a number of roots to convert, and root IDs for conversion will be chosen randomly.

Required Fields

- Source Backend - Choose between SQLIte and PostgreSQL roots to convert

- Target Backend - What database type the source roots will be converted to

- Quantity - How many random roots will be converted

Not Required Fields

- Min Revision Count - Minimum number of revisions a root has. Currently this option might not take effect. We recommend keeping the field empty.

- Max Revision Count - Maximum number of revisions a root has. If random root is above maximum, it will be skipped

- Status - Status of the root that will be converted. We recommend only using 'Active' or 'Active+Deleted' for this field.

Based on the parameters used, Admin will see how many roots were converted and if there were any errors.

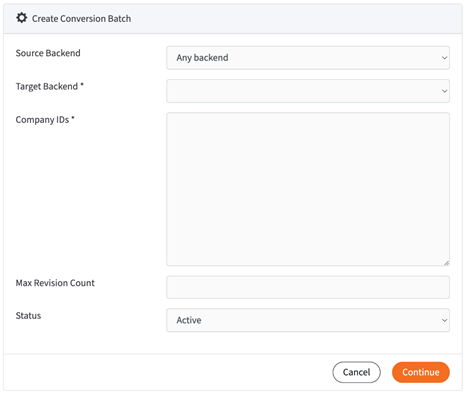

Create Conversion Batch by Company ID

https://hostname/system/roots/conversions/create/company

This method allows the admin to convert all roots of select organizations at once.

Required Fields

- Target Backend - What database type the source roots will be converted to

- Company IDs - Company IDs that will have all roots within them converted to Target Backend (separated by comma). Selecting the parent Company ID will also include child orgs into conversion

Not Required Fields

- Source Backend - Choose between SQLIte and PostgreSQL roots to convert

- Max Revision Count - Maximum number of revisions a root has. If random root is above maximum, it will be skipped

- Status - Status of the root that will be converted. We recommend only using 'Active' or 'Active+Deleted' for this field

Based on the parameters used, Admin will see how many roots were converted and if any were skipped.

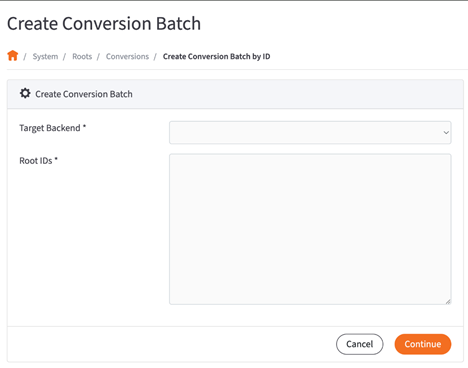

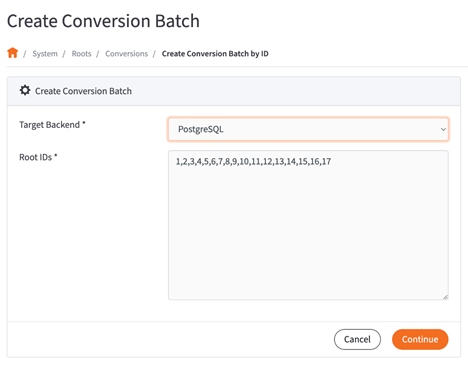

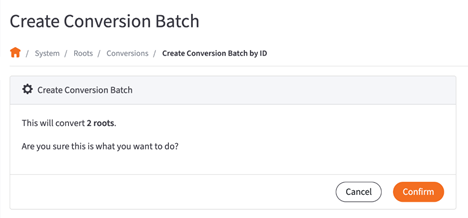

Create Conversion Batch by Root ID

https://hostname/system/roots/conversions/create/ids

This method allows the admin to convert only specific roots by their ID.

Required Fields

- Target Backend - What database type the source roots will be converted to. Currently, please only use the main groups 'PostgreSQL' or 'SQLite' for this field, but not specific database stores

- Root IDs - IDs of roots that will be converted (separated by comma)

From main Roots page on System Console, an Admin can check some or all boxes next to individual roots and then use the 'With Selected' drop down and select Create Batch Conversion. This will navigate admin to Create Conversion Batch by ID and have the field populated with Root IDs of selected roots.

Based on the Root IDs entered, Admin will see that the roots were converted.

Manually convert root from SQLite to PostgreSQL

This is the most manual method among all. It could be useful when you are resolving issues in the database and trying to attempt conversion afterwards.

To convert an existing root metadata database that's backed by a SQLite store to a Postgres store, you should use the sqlite_to_postgres utility that comes with the albatross package.

sqlite_to_postgres will migrate a single root's metadata out of its SQLite database and into the given Postgres roots database. Converting metadata for multiple roots will require invoking this utility multiple times.

sqlite_to_postgres has several options to help out with doing a live conversion, i.e. while the system is online.

· --maintenance-mode - root is placed into a special mode so it won't be accessed by the system at all, not even for read operations. This is the recommended way to do the conversion.

· --create-async-operation - conversion process is associated at the application level with the root. Among other things, this will show the user in the web application that there is an operation on the root currently in progress.

For more detail on sqlite_to_postgres command-line parameters, check the utility's command-line help page.

Here are the command-line parameters that get passed to the conversion utilities.

· SQLite to Postgres

sqlite_to_postgres --config <path-to-config> --root-id <root_id> --log-file <log_file> --use-portal --update-portal --maintenance-mode --create-async-operation |

Address conversion failures

How to restore root if conversion fails

The conversion mechanism is safe: it doesn't destroy data, it only makes a copy of the root in another database type and switches to it once conversion is finished. In case with SQLite to PostgreSQL conversion, the original root will remain in place with a name <root_id>.db.processed - it can be renamed back if needed.

If conversion fails, the UI will throw an error, abort the conversion process, disable maintenance mode and make the root operational again. The root will keep using the old database type.

In a rare case when conversion process crashes on the server machine, the root will remain in the original database type and in maintenance mode, not available to users.

If you waited more than 30 minutes and conversion hasn't complete, at first, make sure that the process is indeed crashed or hung up:

-

In the list of processes, there shouldn't be an active process

sqlite_to_postgreswith a root number as an argument. -

If the process is still there, make sure the conversion log file located in

db_conversionfolder inside the main log folder is no longer being written. Then, kill this process.

To make the root operational again, you need to disable maintenance mode from the list of roots using More -> Toggle Maintenance Mode. This will make the root operational again.

How to analyze conversion failures

Conversion utilities may fail for various reasons. The log file should contain all the information needed to determine the cause of the error.

When running the conversion utilities directly, you specify the log file path on the command-line.

When the conversion utilities are run using the web (i.e. using albatross HTTP APIs), the log file location is:

-

logs/db_conversionfolder (under the main installation folder)

and the log file is named as one of the following:

convert_sqlite_to_pg_root_id_<root-id>.logconvert_pg_to_sqlite_root_id_<root-id>.log

If the log messages are unclear, you can provide these logs to support to investigate.

How to fix invalid UTF8 symbols preventing root conversion

In an attempt to convert a root, you may encounter issues with a conversion like this:

023-09-12 07:59:52.408985|ERRR||copy operation failed status: 7 sqlstate: '22021' severity: 'ERROR' msg: 'invalid byte sequence for encoding "UTF8": 0xed 0xa0 0xbd' detail: <none> query: <none>Follow the instructions below to fix these issues.

Download and extract the bundle to SQLite tools for Windows

It can be picked up on the SQLite site: https://sqlite.org/download.html

Latest package at the time of writing this manual: https://sqlite.org/2024/sqlite-tools-win-x64-3450300.zip

Dump the database to the SQL text file

D:\Tools>sqlite3.exe D:\rootstore1\roots\0AF\000AF2DF.db

.output D:\rootstore1\roots\0AF\dump.sql

.dump

Use the attached script (fix_utf8_dump.py) to process the dump and fix it

D:\Tools>"C:\Anchor Server\penv\Scripts\python.exe" fix_utf8_dump.py --help

usage: fix_utf8_dump.py [-h] -i INPUT -o OUTPUT [-d]

Utility to remove invalid UTF-8 byte sequences from DB dumps

optional arguments:

-h, --help show this help message and exit

-i INPUT, --input INPUT

Input DB dump file

-o OUTPUT, --output OUTPUT

Output DB dump file

-d, --dryrun Dry-Run - collect orgs information only

Example:

D:\Tools>"C:\Anchor Server\penv\Scripts\python.exe" fix_utf8_dump.py -i test_dump.sql -o check.sql -d

Found line with incorrect UTF8 sequence, will fix:

--------

ORIGINAL: b'INSERT INTO "file" VALUES(717535,56151696,\'Fwd_ New Video\xed\xa0\xbd\xed\xb1\x8d_ How to make contours with the ROCK Surveyor APP.msg\',24890736,1615405656,1,1615406247,NULL,NULL);\n'

FIXED : INSERT INTO "file" VALUES(717535,56151696,'Fwd_ New Video _ How to make contours with the ROCK Surveyor APP.msg',24890736,1615405656,1,1615406247,NULL,NULL);

--------

Found line with incorrect UTF8 sequence, will fix:

--------

ORIGINAL: b'INSERT INTO "file" VALUES(717535,56151697,\'Fwd_ \xed\xa0\xbd\xed\xb4\xa5 Tom_ you need to meet the ROCK Cloud.msg\',24890736,1615405660,1,1615406247,NULL,NULL);\n'

FIXED : INSERT INTO "file" VALUES(717535,56151697,'Fwd_ Tom_ you need to meet the ROCK Cloud.msg',24890736,1615405660,1,1615406247,NULL,NULL);

--------

Found line with incorrect UTF8 sequence, will fix:

--------

ORIGINAL: b'INSERT INTO "event" VALUES(717535,93982804,0,1,1615405659,56151696,NULL,1,NULL,NULL,4656870,NULL,NULL,0,0,24890736,\'Fwd_ New Video\xed\xa0\xbd\xed\xb1\x8d_ How to make contours with the ROCK Surveyor APP.msg\');\n'

FIXED : INSERT INTO "event" VALUES(717535,93982804,0,1,1615405659,56151696,NULL,1,NULL,NULL,4656870,NULL,NULL,0,0,24890736,'Fwd_ New Video _ How to make contours with the ROCK Surveyor APP.msg');

--------

Found line with incorrect UTF8 sequence, will fix:

--------

ORIGINAL: b'INSERT INTO "event" VALUES(717535,93982805,0,1,1615405663,56151697,NULL,1,NULL,NULL,4656870,NULL,NULL,0,0,24890736,\'Fwd_ \xed\xa0\xbd\xed\xb4\xa5 Tom_ you need to meet the ROCK Cloud.msg\');\n'

FIXED : INSERT INTO "event" VALUES(717535,93982805,0,1,1615405663,56151697,NULL,1,NULL,NULL,4656870,NULL,NULL,0,0,24890736,'Fwd_ Tom_ you need to meet the ROCK Cloud.msg');

--------

Restore the DB from the dump and check if it works

Don't forget to set DB to maintenance and do a backup like this:

D:\Tools><nul (set/p z=) >fixed.db

D:\Tools>sqlite3.exe fixed.db

.read D:\rootstore1\roots\0AF\dump_fixed.sql

.exit

cd D:\rootstore1\roots\0AF

D:\rootstore1\roots\0AF>ren 000AF2DF.db 000AF2DF.bak_SYNC-17038

D:\rootstore1\roots\0AF>copy D:\Tools\fixed.db 000AF2DF.db

Turn maintenance off and attempt the conversion. It should be successful now.

SUPPORT | 720-204-4500 | 800-352-0248

- Contact Axcient Support at https://partner.axcient.com/login or call 800-352-0248

- Have you tried our Support chat for quick questions?

- Free certification courses are available in the Axcient x360Portal under Training

- Subscribe to Axcient Status page for updates and scheduled maintenance

1789